
formatted string literal; see f-strings. The ‘f’ may be combined with ‘r’, but not with ‘b’ or ‘u’, therefore raw

The detect_encoding() function is used to detect the encoding that should be used to decode a Python source file. It requires one argument,
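As a minimal sketch of how this can look in practice, the snippet below calls `tokenize.detect_encoding()` from the standard library on two in-memory byte strings. The helper name `sniff_encoding` and the sample sources are assumptions made for illustration only.

```python
import io
import tokenize


def sniff_encoding(source_bytes):
    """Return the encoding name detect_encoding() reports for source_bytes."""
    # detect_encoding() takes a readline callable that yields bytes and
    # returns a tuple: (encoding name, list of lines it has already read).
    encoding, _lines_read = tokenize.detect_encoding(
        io.BytesIO(source_bytes).readline
    )
    return encoding


# No encoding declaration: the default applies.
plain = sniff_encoding(b"x = 1\n")

# An explicit declaration in the first line is honoured (and normalized).
declared = sniff_encoding(b"# -*- coding: latin-1 -*-\nx = 1\n")

print(plain)     # utf-8
print(declared)  # iso-8859-1
```

Passing a `readline` callable rather than a file name lets the same function work on real files opened in binary mode and on in-memory buffers.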

Tokenization is an important preprocessing step in NLP because it transforms unstructured text data into a structured format that machines can readily analyze. By breaking text down into tokens, we gain the ability to extract valuable insights, perform statistical analysis, and build models for a wide range of text-related tasks. Python provides several powerful libraries and tools for tokenization, each with its own features and capabilities. In this article, we will explore some of the most commonly used libraries for tokenization in Python and learn how to use them in your own projects.

instance of the bytes type instead of the str type. They may only contain ASCII characters; bytes with a numeric value of 128 or greater must be expressed with escapes. The identifiers match, case, type and _ can syntactically act as keywords in certain contexts,
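The ASCII-only rule for bytes literals can be checked with a short experiment. This is an illustrative sketch; the helper `compiles` and the sample values are assumptions, not part of the original text.

```python
# A bytes literal: the two escapes are the UTF-8 encoding of "é".
data = b"caf\xc3\xa9"
kind = type(data).__name__
text = data.decode("utf-8")


def compiles(expr_source):
    """Return True if expr_source is accepted by the Python compiler."""
    try:
        compile(expr_source, "<demo>", "eval")
        return True
    except SyntaxError:
        return False


# Escaped bytes are fine; a raw non-ASCII character in a bytes literal is not.
ok_escaped = compiles(r'b"caf\xc3\xa9"')
ok_raw = compiles('b"café"')

print(kind, len(data), text)  # bytes 5 café
print(ok_escaped, ok_raw)     # True False
```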

type can be determined by checking the exact_type property on the named tuple returned from tokenize.tokenize(). Operators are symbols or special characters that carry out operations on one or more operands.
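To make the role of `exact_type` concrete, here is a small sketch that tokenizes one line of source and lists the exact type of each operator. The sample source line is an assumption chosen for illustration.

```python
import io
import token
import tokenize

source = b"total = a + b * 2\n"

operators = []
# tokenize.tokenize() expects a readline callable that returns bytes.
for tok in tokenize.tokenize(io.BytesIO(source).readline):
    # Every operator has the generic type OP; exact_type tells them apart.
    if tok.type == token.OP:
        operators.append((tok.string, token.tok_name[tok.exact_type]))

print(operators)
# [('=', 'EQUAL'), ('+', 'PLUS'), ('*', 'STAR')]
```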

Tokenization is a fundamental technique in natural language processing (NLP) and text analysis that lets us break a sequence of text down into smaller, meaningful units called tokens. It serves as the foundation for various NLP tasks such as information retrieval, sentiment analysis, document classification, and language understanding. Python offers a number of powerful libraries for tokenization, each with its own features and capabilities.
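Before reaching for a dedicated library, the basic idea can be sketched with the standard library alone. The function `simple_tokenize` below is a hypothetical helper, and the regular expression is a deliberately naive assumption: it treats runs of word characters as one token and every punctuation mark as its own token.

```python
import re


def simple_tokenize(text):
    """Split text into word tokens and single-character punctuation tokens."""
    # \w+ matches a run of letters, digits or underscores;
    # [^\w\s] matches any single character that is neither word nor whitespace.
    return re.findall(r"\w+|[^\w\s]", text)


tokens = simple_tokenize("Tokens are small, meaningful units.")
print(tokens)
# ['Tokens', 'are', 'small', ',', 'meaningful', 'units', '.']
```

A rule this simple mishandles contractions, URLs, and hyphenated words, which is one reason real projects usually rely on a tokenization library instead.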

In most cases, the token_urlsafe() function is probably the best option, so start with that one. If you prefer random strings encoded in hexadecimal notation (which gives you only characters in the 0-9 and a-f ranges), use token_hex(). Finally, if you prefer a raw binary string, without any encoding, use token_bytes().
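All three functions live in the standard library's `secrets` module. The sketch below calls each one with the same size; the choice of 32 bytes is an assumption for illustration, not a recommendation taken from the text.

```python
import secrets

# URL-safe text token: letters, digits, "-" and "_".
url_token = secrets.token_urlsafe(32)

# Hexadecimal text token: two hex digits per random byte.
hex_token = secrets.token_hex(32)

# Raw random bytes with no text encoding applied.
raw_token = secrets.token_bytes(32)

print(url_token)
print(hex_token)
print(raw_token)
```

The argument is a number of random bytes in all three cases, so the text forms are longer than the number you pass in.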

Python’s tokenizer, also called the lexer, reads the source code character by character and groups the characters into tokens based on their meaning and context. Note that this feature is defined at the syntactical level, but implemented at compile time.

In the standard interactive interpreter, an entirely blank logical line (i.e. one containing not even whitespace or a comment) terminates a multi-line statement. If no encoding declaration is found, the default encoding is UTF-8. In the example above you can see that when I requested a token of 20 bytes, the resulting base64 encoded string is 27 characters long.
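The jump from 20 to 27 follows from base64 arithmetic: each character carries 6 bits, so n bytes need 4n/3 characters, rounded up once the padding is stripped. The helper `urlsafe_length` below is a hypothetical name used only to sketch that calculation.

```python
import secrets


def urlsafe_length(nbytes):
    """Length of unpadded base64 text for nbytes of input (4n/3, rounded up)."""
    return (4 * nbytes + 2) // 3


token = secrets.token_urlsafe(20)

print(len(token))          # 27
print(urlsafe_length(20))  # 27
```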

Tokens act as the building blocks for further analysis, such as counting word frequencies, identifying keywords, or analyzing the syntactic structure of sentences. Tokenizing is also a vital step in the compilation and interpretation of Python code. It breaks the source code down into smaller components, making it easier for the interpreter or compiler to understand and process the code.
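Counting word frequencies, the first use mentioned above, takes only a few lines once the text is tokenized. This is a rough sketch; the sample sentence and the simple `\w+` pattern are assumptions for illustration.

```python
import re
from collections import Counter

text = "the cat saw the dog and the dog saw the cat"

# Lower-case the text, then treat each run of word characters as a token.
words = re.findall(r"\w+", text.lower())

# Counter maps each distinct token to the number of times it occurs.
frequencies = Counter(words)
top = frequencies.most_common(1)

print(frequencies["dog"])  # 2
print(top)                 # [('the', 4)]
```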

Proficiency in handling tokens is essential for maintaining precise and efficient code, and it supports businesses in creating reliable software. In a constantly evolving Python landscape, mastering tokens becomes a valuable asset for the future of software development and innovation. Embrace the realm of Python tokens to see your projects flourish. The difference A - B returns the elements that are in A but not in B. Similarly, B - A returns the elements that are in B but not in A.
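The two set differences described above can be tried directly with Python's built-in `set` type. The contents of `a` and `b` are hypothetical values chosen for illustration.

```python
a = {"if", "else", "while", "for"}
b = {"for", "while", "def"}

# Elements that are in a but not in b.
only_in_a = a - b

# Elements that are in b but not in a.
only_in_b = b - a

print(sorted(only_in_a))  # ['else', 'if']
print(sorted(only_in_b))  # ['def']
```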

It uses a set of rules and patterns to identify and classify tokens. When the tokenizer finds a sequence of characters that looks like a number, it creates a numeric literal token. Similarly, if the tokenizer encounters a sequence of characters that matches a keyword, it creates a keyword token. Literals are constant values that are specified directly in the source code of a program. They represent fixed values that do not change during the execution of the program. Python supports various kinds of literals, including string literals, numeric literals, boolean literals, and special literals such as None.
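This classification can be observed with the standard library's `tokenize` module. One caveat worth knowing: `tokenize` reports keywords with the generic NAME type, so the sketch below uses `keyword.iskeyword()` to tell keywords and identifiers apart. The sample source line and the label strings are assumptions for illustration.

```python
import io
import keyword
import token
import tokenize

source = "x = 42 if y else 3.14\n"

classified = []
# generate_tokens() is the str-based counterpart of tokenize.tokenize().
for tok in tokenize.generate_tokens(io.StringIO(source).readline):
    if tok.type == token.NUMBER:
        classified.append((tok.string, "numeric literal"))
    elif tok.type == token.NAME:
        # Keywords and identifiers share the NAME type; check the spelling.
        label = "keyword" if keyword.iskeyword(tok.string) else "identifier"
        classified.append((tok.string, label))

for item in classified:
    print(item)
```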

String literals are sequences of characters enclosed in single quotes ('') or double quotes (""). They can contain any printable characters, including letters, numbers, and special characters. Python also supports triple-quoted strings, which can span multiple lines and are often used for docstrings, multi-line comments, or multi-line strings. See also PEP 498 for the proposal that added formatted string literals, and str.format(), which uses a related format string mechanism. A string literal with ‘f’ or ‘F’ in its prefix is a
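The quoting styles and the two formatting mechanisms mentioned above can be compared side by side. The variable names and sample values are assumptions for illustration.

```python
# Single and double quotes produce the same kind of string.
single = 'hello'
double = "hello"

# A triple-quoted string may span several lines.
multi = """first line
second line"""

# A formatted string literal and str.format() building the same text.
name = "tokens"
via_fstring = f"Hello, {name}!"
via_format = "Hello, {}!".format(name)

print(single == double)           # True
print(multi.splitlines())         # ['first line', 'second line']
print(via_fstring == via_format)  # True
```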

When working with web applications, it is often necessary to generate passwords, tokens or API keys to be assigned to clients for authentication. While there are many sophisticated ways to generate these, in many cases it is perfectly adequate to use sufficiently long and random sequences of characters. The problem is that in Python there is more than one way to generate random strings, and it isn’t always clear which way is the best and most secure. Python was designed with an emphasis on code readability, and its syntax allows programmers to express their ideas in fewer lines of code; these programs are commonly known as scripts. Scripts are made up of character sets, tokens, and identifiers.

formatted strings are possible, but formatted bytes literals are not. One syntactic restriction not indicated by these productions is that whitespace is not allowed between the stringprefix or bytesprefix and the rest of the literal. The source

If a conversion is specified, the result of evaluating the expression is converted before formatting. The syntax of identifiers in Python is based on the Unicode standard annex UAX-31, with elaboration and changes as defined below; see also PEP 3131 for further details.
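The rule that a conversion runs before formatting can be seen by combining a conversion with a format specification in a formatted string literal. The sample value and variable names are assumptions for illustration.

```python
value = "café"

# The three conversions: !s calls str(), !r calls repr(), !a calls ascii().
with_str = f"{value!s}"
with_repr = f"{value!r}"
with_ascii = f"{value!a}"

# Conversion first, then formatting: the width applies to the repr text,
# quotes included, not to the original four-character string.
padded = f"{value!r:>10}"

print(with_str)
print(with_repr)
print(with_ascii)
print(padded == format(repr(value), ">10"))  # True
```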

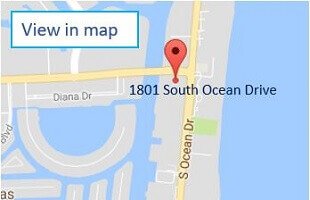

1801 South Ocean Drive, Suite C Hallandale Beach, FL 33009


FOR MORE INFORMATION, CALL US

(786) 797.0441 or 305 984 5805